How ADAS sensor innovation protects road safety and reduces traffic accidents

Traffic safety is a huge challenge: more than 1.1 million people are killed each year in road traffic accidents, and an estimated 20 to 50 million more are injured.

A major cause of these accidents is driver error. Automakers and government regulators are constantly looking for ways to improve safety, and in recent years, advanced driver assistance systems (ADAS) have made great strides in helping to reduce road casualties.

In this article, we will explore the role of ADAS in improving road safety and the various sensor technologies that are critical to achieving this goal.

The Evolution and Importance of ADAS

Since the first introduction of anti-lock braking systems (ABS) in the 1970s, the use of ADAS technology in passenger vehicles has steadily increased, with corresponding improvements in safety. The National Safety Council (NSC) estimates that in the United States alone, ADAS has the potential to prevent approximately 62% of traffic fatalities, saving more than 20,000 lives each year. In recent years, ADAS features such as automatic emergency braking (AEB) and forward collision warning (FCW) have become increasingly prevalent, with more than a quarter of vehicles equipped with these features to help drivers prevent accidents and ultimately save lives.

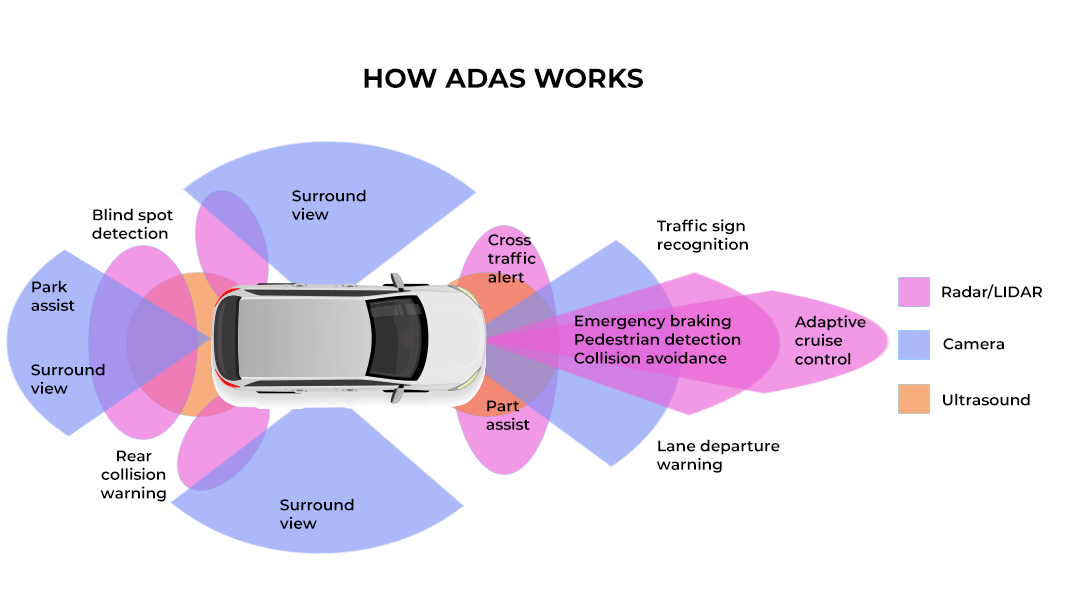

ADAS requires multiple technologies to work together. A perception suite acts as the system’s “eyes,” detecting the vehicle’s surroundings and providing data to the system’s “brain,” which uses this data to decide how the vehicle should act to assist the driver. For example, when a vehicle is detected ahead and the driver does not apply the brakes, AEB applies them automatically to stop the vehicle in time to avoid a rear-end collision.

The ADAS perception suite includes a vision system built around an automotive-grade camera with a high-performance image sensor at its core. The camera captures a video stream of the vehicle’s surroundings, which is used to detect vehicles, pedestrians, traffic signs, and other objects, and is also displayed to assist the driver in low-speed driving and parking situations. The camera is often paired with a depth perception system such as millimeter-wave radar, LiDAR, or ultrasonic sensors, which provides depth information to enhance the camera’s 2D image, add redundancy, and remove ambiguity in object distance measurements.
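The AEB behavior described above can be illustrated with a time-to-collision check. This is only a minimal sketch: the function names and the 1.5-second threshold are hypothetical choices for illustration, not values from any production system, and real AEB logic weighs many more inputs.

```python
def time_to_collision_s(gap_m: float, closing_speed_mps: float) -> float:
    """Seconds until impact if nothing changes; infinity when the gap is not closing."""
    if closing_speed_mps <= 0:
        return float("inf")
    return gap_m / closing_speed_mps


def should_auto_brake(
    gap_m: float,
    closing_speed_mps: float,
    driver_braking: bool,
    ttc_threshold_s: float = 1.5,  # hypothetical threshold, for illustration only
) -> bool:
    """Request automatic braking only if the driver has not reacted and impact is near."""
    if driver_braking:
        return False
    return time_to_collision_s(gap_m, closing_speed_mps) < ttc_threshold_s


# A vehicle 10 m ahead, closing at 10 m/s, driver not braking: 1.0 s to impact.
print(should_auto_brake(gap_m=10.0, closing_speed_mps=10.0, driver_braking=False))
```

The gap and closing speed would come from the perception suite, which is why the accuracy of the depth measurement matters so much for a feature like this.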

Implementing ADAS can be a challenge for automakers and their Tier 1 system suppliers: the processing power available to handle the data generated by multiple sensors is limited, and the sensors themselves have performance limitations. Automotive requirements dictate that every component be extremely reliable, in both the hardware and the associated software algorithms, which require extensive testing to ensure safety. The system must also perform consistently in the harshest lighting and weather conditions, withstand extreme temperatures, and operate reliably throughout the vehicle’s lifecycle.

Key Sensor Technologies in ADAS Systems

Let’s now take a closer look at some of the key sensor technologies used in ADAS, including image sensors, LiDAR, and ultrasonic sensors. Each sensor provides a specific type of data, which software algorithms process and combine to build an accurate and comprehensive understanding of the environment. This process, known as sensor fusion, uses the redundancy of multiple sensor modalities to improve the accuracy and reliability of perception algorithms, and the resulting higher-confidence decisions enable higher levels of safety. The complexity of these multi-sensor suites can rise quickly, and the algorithms require more and more processing power. At the same time, the sensors themselves are becoming more advanced, allowing some processing to be done locally at the sensor rather than on a central ADAS processor.
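One simple way to see why redundancy raises confidence is inverse-variance weighting, which combines independent distance estimates so that the less noisy sensor counts for more. The sketch below is a textbook illustration, not the fusion method of any particular ADAS stack; the function name and the example camera and radar figures are assumptions.

```python
def fuse_estimates(estimates: list[tuple[float, float]]) -> tuple[float, float]:
    """Fuse independent (value, variance) estimates by inverse-variance weighting.

    Returns (fused_value, fused_variance). The fused variance is always
    smaller than the smallest input variance.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / total_weight
    return fused_value, 1.0 / total_weight


# Hypothetical readings of the distance to the same object:
camera = (20.0, 4.0)  # metres, variance in m^2 (less certain)
radar = (22.0, 1.0)   # metres, variance in m^2 (more certain)

distance_m, variance_m2 = fuse_estimates([camera, radar])
print(f"fused distance: {distance_m:.1f} m, variance: {variance_m2:.1f} m^2")
```

The fused estimate sits closer to the radar reading, and its variance is lower than that of either sensor alone, which is the sense in which multiple modalities support higher-confidence decisions.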

▶ Automotive Image Sensors

Image sensors are the “eyes” of the vehicle and arguably the most important type of sensor in any ADAS-equipped vehicle. They provide the image data behind a wide range of ADAS features. These range from “machine vision” driver assistance features such as automatic emergency braking, forward collision warning, and lane departure warning, to “human perspective” features such as 360-degree surround view cameras for parking assistance and camera monitoring systems for electronic mirrors, to driver monitoring systems that detect distracted or fatigued drivers and sound an alert to prevent accidents.

▶ Depth Sensors (LiDAR)

Accurately measuring the distance between an object and the sensor is known as depth perception. Depth information removes ambiguity from a scene and is essential for a wide range of ADAS features and for achieving higher levels of ADAS and fully automated driving.

A variety of technologies are available for depth perception. When depth performance is the priority, light detection and ranging (LiDAR) is the best choice. LiDAR provides depth perception with high depth and angular resolution, and because the system pairs the sensor with near-infrared (NIR) lasers for active illumination, it works in all ambient light conditions. It is suitable for both close-range and long-range applications. Low-cost mmWave radar sensors are more prevalent in today’s automotive applications, but they lack the angular resolution of LiDAR and so cannot provide the high-resolution 3D point cloud of the environment that higher levels of autonomous driving, beyond basic ADAS, require.

The most common LiDAR architecture is direct time-of-flight (ToF), which calculates distance directly by emitting a short pulse of infrared light and measuring the time it takes for the signal to reflect from an object and return to the sensor. LiDAR sensors repeat this measurement while scanning the light across their field of view to capture the entire scene.
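The direct ToF calculation reduces to halving the round-trip path of the pulse. The following is a minimal sketch of that arithmetic; the function and constant names are illustrative and not taken from any LiDAR vendor's API.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum


def tof_distance_m(round_trip_s: float, speed_m_per_s: float = SPEED_OF_LIGHT_M_PER_S) -> float:
    """Distance to the target given the measured round-trip time of a pulse.

    The pulse travels to the object and back, so the one-way distance is
    half of speed multiplied by time.
    """
    return speed_m_per_s * round_trip_s / 2.0


# A pulse that returns after 1 microsecond came from roughly 150 m away.
print(f"{tof_distance_m(1e-6):.1f} m")

# A 1 ns timing error corresponds to roughly 15 cm of range error.
print(f"{tof_distance_m(1e-9) * 100:.1f} cm")
```

The second figure shows why LiDAR receivers need very fine timing resolution: every nanosecond of uncertainty translates into about 15 cm of distance.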

▶ Ultrasonic Sensors

Another technology used for distance measurement is ultrasonic detection, which uses a sensor to emit sound waves at frequencies above the human hearing range and then detect the sound that bounces back, measuring distance using time-of-flight.

Ultrasonic sensors can be used for close-range obstacle detection and low-speed maneuvering applications such as parking assist. One advantage of ultrasonic sensors is that sound travels much more slowly than light: a reflected sound wave typically takes milliseconds to return to the sensor, compared to nanoseconds for light. As a result, ultrasonic sensors require much less processing performance, which reduces system cost.

An example of an ultrasonic sensor is the ON Semiconductor NCV75215 Parking Distance Measurement ASSP. This component uses a piezoelectric ultrasonic transducer to perform time-of-flight measurements of the distance to obstacles during the vehicle parking process. It can detect objects at distances from 0.25 meters to 4.5 meters with high sensitivity and low noise.
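To put the timing difference between sound and light in perspective, the sketch below computes round-trip times at the two ends of the 0.25 m to 4.5 m range quoted above. The speed of sound used here (343 m/s, roughly dry air at 20 degrees C) is an assumption, and the function name is illustrative; it has no connection to the NCV75215 itself.

```python
SPEED_OF_SOUND_M_PER_S = 343.0          # assumed: dry air at about 20 degrees C
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum


def round_trip_time_s(distance_m: float, speed_m_per_s: float) -> float:
    """Time for a pulse to reach an object at distance_m and return."""
    return 2.0 * distance_m / speed_m_per_s


for distance_m in (0.25, 4.5):
    sound_ms = round_trip_time_s(distance_m, SPEED_OF_SOUND_M_PER_S) * 1e3
    light_ns = round_trip_time_s(distance_m, SPEED_OF_LIGHT_M_PER_S) * 1e9
    print(f"{distance_m} m: sound {sound_ms:.2f} ms, light {light_ns:.2f} ns")
```

Across this range an ultrasonic echo returns after roughly 1.5 ms to 26 ms, while a light pulse covering the same distance returns within tens of nanoseconds, about six orders of magnitude sooner.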

Conclusion

The automotive industry continues to invest heavily in ADAS and to pursue the goal of fully autonomous vehicles, moving beyond the basic driver assistance functions of SAE Level 1 and Level 2 to the true autonomous driving capabilities of SAE Levels 3, 4, and 5. Reducing road casualties is one of the main drivers behind this trend, and sensor technology plays a vital role in the transformation of automotive safety.